Secure MLOps platform for compliant Edge AI workflows

Let teams compile and verify models for embedded devices together, with full traceability across their workflow

Designed for convenient Edge AI development

Integrates model workflows, compliance, and hardware evaluation in one platform

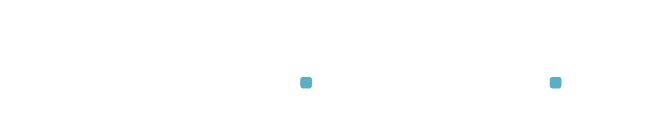

Workflow and Collaboration

Unified development workflow

Connect model compilation, quantization, and verification in one shared pipeline.

Built for cross-team collaboration

Give engineers, safety teams, and managers a clear overview of available models, model status, and testing progress.

End-to-end process visibility

Ensure every model change and artifact is logged for reliable collaboration and audits.

On-device benchmarking

Run on real edge hardware

Benchmark models directly on phones, IoT devices, and embedded systems.

Scalable compatibility testing

Validate models across many devices to detect performance and integration issues earlier.

On-prem and cloud-based device farms

Use the same workflow with customer-owned on-prem hardware as with cloud-based device farms.

Compliance optimization

Automatic compliance evidence

Get a continuous digital trail of models, builds, tests, and approvals.

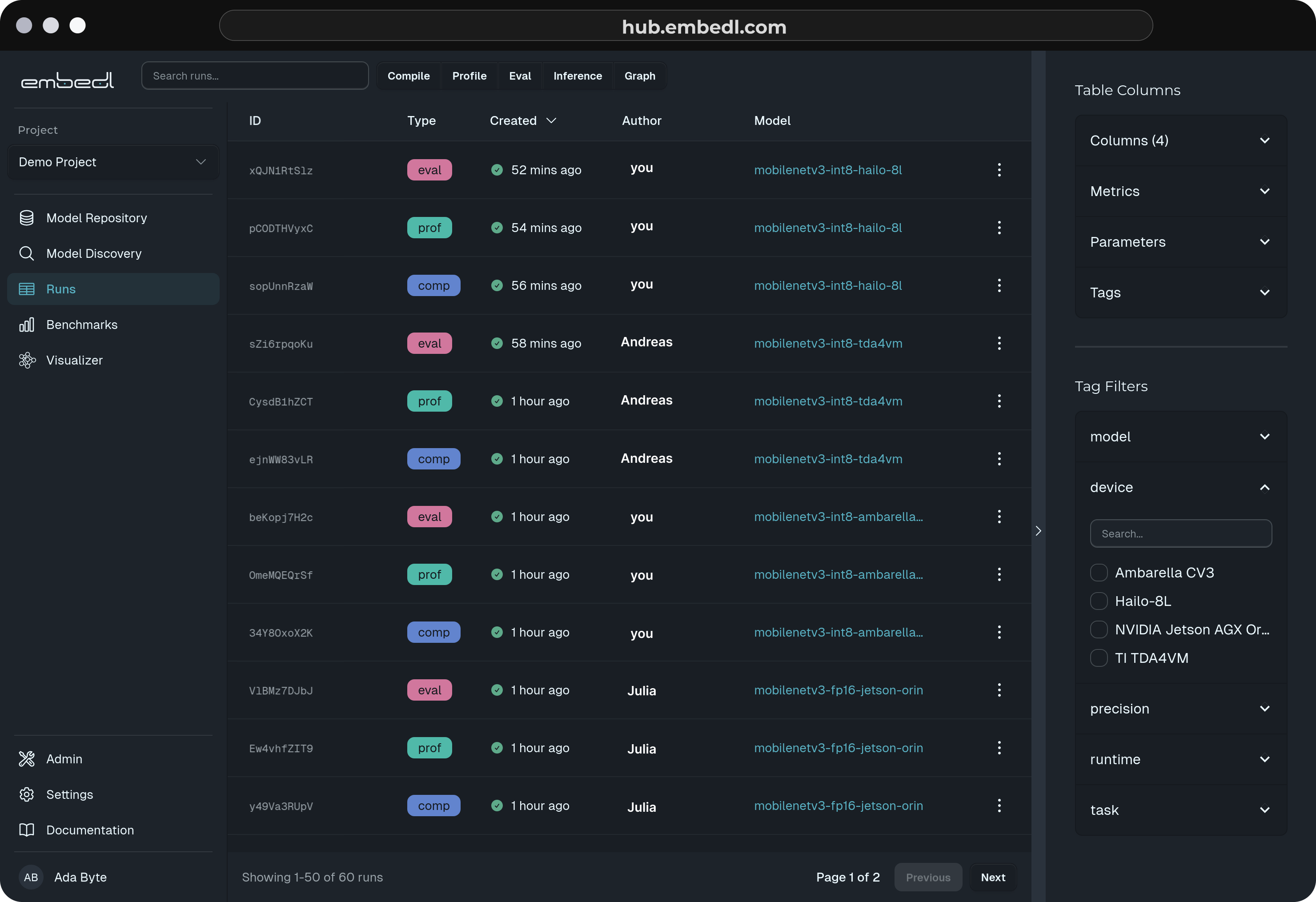

Traceable model lineage

Know exactly how every deployed model was produced, verified, and released.

Audit preparation without disruption

Stop distracting engineers with last-minute compliance reporting and documentation.

Flexible deployment options

Deploy Embedl Hub on-prem or use it in the cloud

SaaS

Get started instantly with Embedl Hub running in our fully managed cloud environment.

AWS Marketplace

Deploy Embedl Hub directly inside your AWS account through AWS Marketplace.

Self-Hosted Containers

Run Embedl Hub entirely within your own infrastructure using official Docker images.

Built for performance-critical industries

Enables compliant, reliable AI delivery across automotive, defense, and manufacturing

Automotive

Complex supply chains and safety standards make AI verification slow and fragmented. Embedl Hub centralizes model workflows to maintain compliance and accelerate release cycles.

Defense

Security and audit requirements make in-house AI validation rigid and hard to scale. Embedl Hub enables controlled, traceable development inside secured environments.

Industrial Automation

Legacy systems and distributed teams create visibility gaps in AI deployment. Embedl Hub connects model development, testing, and traceability across production lines.

Loved by Devs

"Potential to become the de facto standard for edge ML optimization."

Embedded Engineer

“This kind of tooling makes edge AI optimization practical and scalable, especially for computer vision workflows.”

AI Researcher

“Multi-cloud support is a differentiator.”

Edge AI Engineer

“What stood out: Seamless model compilation & benchmarking on remote devices”

Deep Learning Scientist

"Having the ability to deeply inspect the cognitive blocks of our AI models, perform hardware-aware optimisation, benchmark various layers, and deploy models through seamless hardware abstraction is truly game-changing"

Shubham Shrivastava, Head of Machine Learning at Kodiak

Simplify your team's edge AI workflow with Embedl Hub

Frequently Asked Questions

Embedl Hub provides a secure, traceable MLOps platform for edge AI workflows. It centralizes model compilation, on-device testing, validation, and artifact tracking into a single system. This enables teams to deliver production-grade edge AI with full traceability and compliance support, especially in safety-critical environments.

Embedl Hub is designed for organizations building AI systems that run on embedded or edge devices. It is used daily by ML engineers, embedded engineers, and verification teams, and is typically purchased by compliance, validation, or engineering leadership responsible for release and audit readiness.

Every model build, transformation, test run, and deployment artifact is logged and versioned. The system tracks which compiler version was used, which quantization settings were applied, which runtime executed the model, and on which devices it was validated. This creates a reproducible audit trail across the entire compile → verify → analyze lifecycle.
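As a rough illustration of what such an audit-trail entry could look like, the sketch below models a single build record in plain Python. It is not Embedl Hub's SDK or storage format: the `BuildRecord` class, its field names, and the example values are all hypothetical. It only shows how logging the compiler version, quantization settings, runtime, and validation devices together makes a build reproducible and comparable.

```python
import hashlib
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class BuildRecord:
    """Hypothetical audit-trail entry; names are illustrative, not a real API."""

    model_name: str
    compiler_version: str
    quantization: dict
    runtime: str
    validated_on: tuple

    def fingerprint(self) -> str:
        # Serialize with sorted keys so identical inputs always hash identically.
        payload = json.dumps(asdict(self), sort_keys=True)
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()


# Example values are made up for the sketch.
record = BuildRecord(
    model_name="detector",
    compiler_version="1.2.0",
    quantization={"scheme": "int8"},
    runtime="tflite",
    validated_on=("device-a", "device-b"),
)
print(record.fingerprint()[:12])
```

Two records with the same fingerprint were produced from the same recorded inputs, while any change to the compiler version, quantization settings, runtime, or device list yields a different one.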

The platform provides artifact lineage, experiment tracking, integration testing across devices, and structured metadata logging. It integrates with tools such as Jira, Jama, and MLflow to connect development work with formal requirements and verification processes. This reduces manual audit preparation and ensures documentation is continuously generated as part of normal development work.

No. The core platform is industry-agnostic and purpose-built for edge AI. It supports compliance-driven workflows common in sectors such as defense, automotive, aerospace, and industrial systems. Industry-specific integrations and add-ons can be layered on top.

No. Embedl Hub orchestrates and tracks workflows but does not replace your compiler stack. It integrates with external backends such as Embedl Compiler, ModelOpt, AIMET, and others. You continue using your preferred toolchain while gaining centralized orchestration and traceability.

Yes. Embedl Hub can be deployed inside your intranet using containerized or virtual machine installations. In this setup, all data, artifacts, and logs remain within your infrastructure. A SaaS option is also available for teams that prefer managed hosting.

Yes. Customers can connect internal device farms for automated testing behind the firewall. The same workflows can also run on supported cloud device farms when appropriate. The CLI and SDK remain consistent across deployment modes.

Embedl Hub is purpose-built for edge AI. It focuses on compilation, hardware compatibility, on-device validation, runtime performance benchmarking, and traceability in environments where updates are difficult and compliance requirements are strict. It is opinionated and designed to work out of the box for embedded workflows, rather than being a flexible but generic ML experiment tracker.

Yes. The platform supports cross-functional collaboration between ML engineers, embedded developers, verification teams, and compliance stakeholders. Stakeholders can access dashboards, model metadata, performance comparisons, and artifact history without needing direct access to development environments.

Embedl Hub includes a model repository that allows teams to publish, discover, and reuse optimized models for specific hardware targets and use cases. In SaaS deployments, Embedl can publish pre-optimized models. In on-prem deployments, organizations can maintain their own internal model catalog.

Early, automated testing across multiple devices, runtimes, and model formats detects compatibility and performance issues during development rather than at final integration. This reduces costly rework late in the release cycle.
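To make the idea of testing across devices, runtimes, and model formats concrete, here is a minimal sketch of how a compatibility test matrix might be enumerated. It does not use Embedl Hub's CLI or SDK. The device names, the `RUNTIME_FORMATS` mapping, and the `build_test_matrix` helper are assumptions made for illustration.

```python
from itertools import product

# Hypothetical mapping of runtime -> model formats it can load.
RUNTIME_FORMATS = {"tflite": {"tflite"}, "onnxruntime": {"onnx"}}


def build_test_matrix(devices, runtimes, model_formats):
    """Return every (device, runtime, format) combination that is worth running."""
    return [
        (device, runtime, fmt)
        for device, runtime, fmt in product(devices, runtimes, model_formats)
        if fmt in RUNTIME_FORMATS.get(runtime, set())
    ]


# Placeholder device names; a real setup would list the boards in a device farm.
matrix = build_test_matrix(
    devices=["board-a", "phone-b"],
    runtimes=["tflite", "onnxruntime"],
    model_formats=["tflite", "onnx"],
)
for combo in matrix:
    print(combo)
```

Enumerating the combinations up front means each one can be scheduled and reported individually, so a failure points at a specific device, runtime, and format rather than surfacing at final integration.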

Yes. The platform is designed for production-grade edge AI systems where updates are infrequent or constrained. It emphasizes validation, reproducibility, and audit readiness before release.